What does the European AI Act provide for?

The European Union's AI Act, the world's first bill to regulate artificial intelligence, continues its path in Brussels, where an agreement was also reached on banning biometric surveillance, emotion recognition and predictive policing systems. All the details (and some controversy)

The journey of the AI Act, the proposed European law to regulate the use of artificial intelligence (AI) and an absolute first in the world, continues. Yesterday the European Parliament gave the measure its first green light and approved a series of amendments, including precise limits on facial recognition technologies, considered one of the greatest threats to the fundamental rights of consumers and citizens.

MEPs Brando Benifei of the Democratic Party, co-rapporteur of the text together with Dragoş Tudorache of Renew Europe, and Vincenzo Sofo of Fratelli d'Italia also spoke to Start about this very sensitive issue, on which everyone agrees that action is needed at European level.

STEPS FORWARD ON THE AI ACT

With 84 votes in favour, 7 against and 12 abstentions, the MEPs approved the EU Parliament's position on the AI Act, which aims to establish European rules to regulate artificial intelligence in its uses and above all in its influence on people's daily lives.

The text was approved during the joint meeting of the Internal Market and Consumer Protection (Imco) and Civil Liberties, Justice and Home Affairs (Libe) committees of the EU Parliament.

FOCUS ON BIOMETRIC RECOGNITION

The political groups also reached an agreement on the ban on real-time biometric recognition in public places, an idea cherished by the Minister of the Interior, Matteo Piantedosi, who proposed it for hospitals, commercial areas and stations in Rome, Naples and Milan, although only a few days ago he declared that in the Lombard capital "there is no security emergency".

On this point Benifei said yesterday: "With this position we clearly tell Piantedosi and his colleagues who have shared his views that we do not intend to give in to the idea of a controlled society where everyone is supervised and can be monitored in the name of a false idea of security".

THE NEXT STAGES

Now we await the plenary vote, expected between 12 and 15 June, to start the trilogues, or inter-institutional negotiations, with the EU Council. The goal is to complete the project by spring 2024, which also coincides with the end of the legislature and the European elections.

THE RISK SCALE OF THE AI ACT

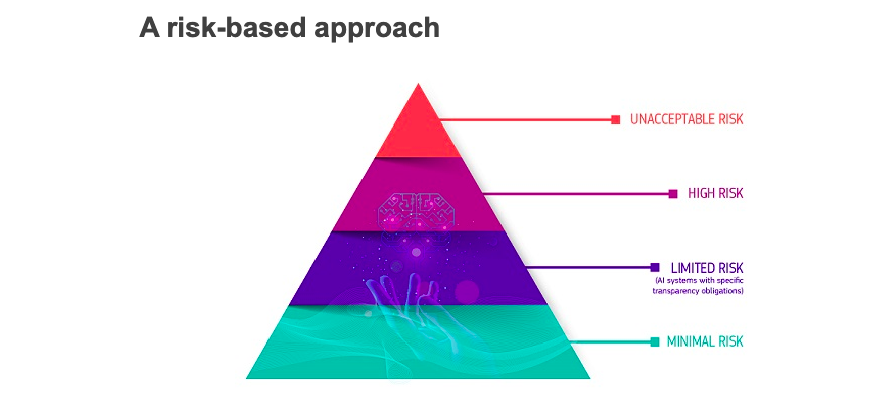

The AI Act, in addition to proposing rules common to the Twenty-seven, identifies the risks that AI can pose when it comes into contact with human beings. However, not all risks are treated equally.

As Benifei explained to Start, the regulation treats some risks as higher, such as those concerning fundamental rights, health or safety. For these, producers of AI systems are required to follow certification procedures that include, among other things, checks on the quality of the data, on human control and on the explainability of the algorithms.

Then there are systems whose uses are considered to pose an unacceptable risk and are therefore prohibited, as in the case of biometric recognition cameras in public spaces, predictive policing and the use of AI for emotion recognition in some areas.

For Sofo too, the AI Act must be a tool that sets limits where technology leads to the violation of privacy and of rights over the processing of personal data, and to social scoring, i.e. the social credit system devised by China to classify the reputation of its citizens.

Sofo also gave the example of what is already happening in the Metaverse, where the use of data and the creation of avatars make it possible to study behavior and obtain predictive analyses, even of political opinions, with obvious consequences for personal freedom and the manipulation of people.

THE AI RISK PYRAMID

To establish the risks at a common level, the text of the law provides for a pyramid of four levels: minimal (AI-enabled video games and spam filters), limited (chatbots), high (scoring of school and professional exams, sorting of resumes, assessing the reliability of evidence in court, robot-assisted surgery) and unacceptable (anything that poses a “clear threat to people's safety, livelihoods and rights”, such as the assignment of a 'social score' by governments).

For minimal-risk systems no intervention is foreseen, while for those at a limited level there are transparency requirements. High-risk technologies, however, must be regulated, and those at a level considered unacceptable are prohibited.

WHAT ABOUT RESEARCH?

However, regulating AI does not mean closing the door to innovation and progress, and for this reason MEPs have provided for exemptions for research activities and for AI components provided under open-source licenses.

Indeed, Benifei spoke to Start about "limited limits" for research, since the regulation concerns not the study itself but the final product that is placed on the market and interacts with human beings. Rather, he called for greater joint action at the European level, also in order to be able to compete with powers such as the United States and China.

On this point, Sofo also said that a balance must be found between protecting freedom and fundamental rights on the one hand and, on the other, research and the development of a technology that will advance in the rest of the world in any case and that will otherwise be imported if we do not have European strategic autonomy.

This is a machine translation from Italian of a post published on Start Magazine at the URL https://www.startmag.it/innovazione/ai-act-fa-un-passo-avanti-e-chiude-alla-societa-del-controllo/ on Fri, 12 May 2023 09:31:29 +0000.